UX Certification

Get hands-on practice in all the key areas of UX and prepare for the BCS Foundation Certificate.

I take a closer look at some unavoidable challenges to effective speech recognition, and I discuss why you may want to think twice before designing dialogue that is 'conversational' and 'natural'. I also offer five important questions that I think should form the basis of any VUI design kick-off meeting.

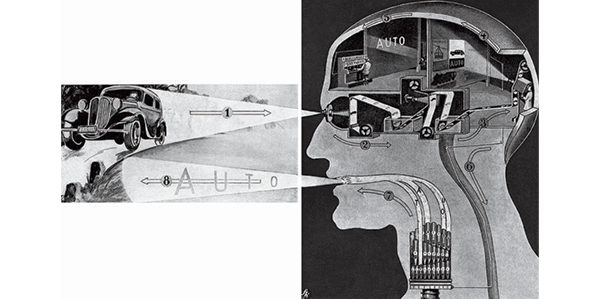

In Part 1 of this article I focused on how we produce speech and on some of the complexities inherent in the speech signal. In this part I discuss other obstacles to recognition accuracy, flag some user/talker behaviours that VUI designers should be aware of, and suggest ways to get your VUI development team thinking about how to minimise and recover from recognition errors.

Speech recognition systems struggle in noisy environments. This is not a surprise: human listeners struggle in noisy environments too. We particularly struggle to understand accented speech (an accent different to our own) in noise. Think of a friend or colleague with an accent different to yours, and the next time you're in a noisy restaurant or pub, notice how disproportionally difficult it is to understand what they are saying. Noise reduction techniques go some way to addressing this problem for speech recognition systems, either by canceling background noise (a technique not without cost as it can distort some of the speech cues and become, somewhat ironically, a form of speech cancellation), or by training speech recognizers in noisy environments.

Although noise is the pantomime villain, there are many other factors that make accurate speech recognition difficult. Faster or slower speech can push the temporal acoustic properties of an utterance beyond the limits of the system's templates; so too can speaking angrily or with frustration, or speaking too softly or too loudly, or speaking with extreme or unexpected intonation. Even deliberately speaking more clearly and precisely in a 'robotic' manner, to try and help recognition, can produce speech patterns that are less similar to the templates that were trained into the system, effectively defeating the exercise. Speech recognizers also struggle with older voices that may have less energy, and with people who have speech impairments, and with… female voices! Yes, really. It's a fact. Recognition accuracy for female voices can be as much as 13% lower than for male voices.

There are two main reasons why recognition performance is worse for females, and neither has to do with females talking too quietly or too loudly or too quickly or too anything else. In fact females generally speak more clearly and more distinctly than males. It's nothing to do with female talkers doing anything 'wrong'.

One reason, argues data scientist and linguist Rachael Tatman, is that training sets — the examples of speech used to teach speech recognition systems what to listen for — are biased towards male voices, with as much as 69% of the speech corpora used for training being male. It goes without saying that systems trained on male voices will not perform well when presented with female voices. So in this respect, yes, some speech recognition systems are gender biased.

The second reason has nothing to do with bias; it has to do with biology. Male and female voices are acoustically different, such that female voices present the speech recognizer with a less robust signal than do male voices. Here's why.

Female vocal cords are, on average, about 6 mm shorter than those of males. As a result, female vocal cords vibrate faster and produce a fundamental frequency that is about 80 Hz higher than that of males. The fundamental frequency is what we perceive as the pitch of a voice. In addition to the fundamental frequency, vibrating vocal cords create harmonics at integer multiples of the fundamental. Let's say a male voice has a fundamental frequency of 100 Hz. It will have harmonics at 200 Hz, 300 Hz, 400 Hz, 500 Hz, 600 Hz, 700 Hz, 800 Hz, 900 Hz, 1000 Hz, and so on (we can stop at 1000 Hz for this example, though in reality there is useful energy up to about 5000 Hz).

Now let's consider a female voice with a fundamental frequency of 200 Hz. It will have harmonics at 400 Hz, 600 Hz, 800 Hz, 1000 Hz and so on. Over the same range of frequencies there are fewer harmonics in the female voice than in the male voice (half as many in this example). This means that, relative to the male voice, the female voice presents the speech recognizer with less acoustic information and a less robust signal. The same decrement in performance, by the way, occurs with children's voices for the same reason — short vocal cords. The human brain has no problem with this. Speech recognizers are not there yet.
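The arithmetic in this example can be checked with a few lines of Python. This is only a sketch of the idealised case described above: the function name `harmonic_series` is made up for illustration, the 100 Hz and 200 Hz fundamentals and the 1000 Hz cut-off are the example values from the text, and real voices are far less tidy.

```python
def harmonic_series(f0_hz, max_hz):
    """Return the fundamental and its integer-multiple harmonics up to max_hz.

    Idealised model: a component at every integer multiple of f0_hz.
    """
    return [f0_hz * n for n in range(1, max_hz // f0_hz + 1)]


# Example values taken from the text, not measurements.
male = harmonic_series(100, 1000)
female = harmonic_series(200, 1000)

print(len(male), male)      # components for the 100 Hz voice
print(len(female), female)  # components for the 200 Hz voice
```

Counting the fundamental, the 100 Hz voice has ten components at or below 1000 Hz and the 200 Hz voice has five, which is the "half as many" in the example.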

As we can see, the path to optimal speech recognition is littered with obstacles and challenges that present difficulties not only for recognition engines but also for VUI designers.

And then there's the paradox that I'll simply call 'the natural dialogue trap'.

Sociologist Sherry Turkle reminds us that, "Conversation is the most human and humanizing thing that we do." Conversation is natural, automatic and easy — and therein lies a problem.

The paradox is that the more natural and conversational the VUI dialogue, and the more 'free rein' is given to the user, the more likely the user will go 'off piste' and deviate from the parameters that the recognition algorithm can handle. In other words, the more the VUI designer tries to create a natural and conversational dialogue, the more liberties the user will take, and this will increase recognition errors. Think of it as walking an overly energetic dog. Keep the dog on a leash and you can control the situation. Let it slip free and do what comes naturally and it'll run off and chase deer across Richmond Park with you running after it shouting "Fenton! Fenton!! Fenton!!!". At that point 'error recovery' is just wishful thinking.

In their book The Media Equation, Byron Reeves and Clifford Nass describe how people treat computers (and other media devices) exactly as if they were real people. In a comprehensive series of experiments they showed that when we use media devices we (everyone, regardless of experience, education, age, tech-savviness, or culture) automatically treat computers as social actors (even as team mates in some circumstances). We attribute to the device human characteristics such as personality, intelligence, expertise, and even gender, and we behave towards devices either politely or aggressively, with deference or with disdain.

Why do we do this?

Old brains. Our brains have evolved over more than 200,000 years to socialize person-to-person in a world where interfaces are real physical objects. Given this behaviour towards computer devices it is inevitable that when explicitly invited to talk to a computer, we slip into natural conversation. We don't need prompting. It's what we do.

So, what does natural conversational dialogue involve? It rarely involves crystal clear diction or words spoken one at a time. Conversation is messy. It involves continuous speech that may be garbled or muttered or rushed or incoherent, filled with hesitations, ers, ums and ahs, sighs, coughs, throat clearing, self-corrections, backtracking, missing words, unexpected changes of direction and topic, slang, false starts, pauses, repetition, laughing, whispering and shouting. It's off the leash and running pell-mell across Richmond Park.

Can your speech recognition system handle this? Has your VUI been designed to anticipate recognition errors and quickly get the user back on track? Speech recognition is error prone in ways that more traditional interfaces are not. How can you get your team to think of the VUI as an error recovery system?

I feel as though the obvious next step is to present a detailed 'How to…' tutorial on VUI design. But I can't do it justice in a web article, and others have already done a great job. I recommend starting with Cathy Pearl's book Designing Voice User Interfaces which is comprehensive, practical and draws on her vast experience.

Instead, I'd like to take a slightly different approach and consider how a VUI design team leader might try to nudge a team's thinking away from a 'natural' and (some would say) skeuomorphic approach to conversational design, and towards a focus on task completion and error recovery.

When I lead a VUI project, my first step is to get the entire development team together, and ask them these five questions. I use the prompts to generate discussion and get everyone to think about where we're heading and about the challenges we'll face — before we get started.

Begin by challenging the basic premise that speech recognition is the answer to your question. Does anyone actually know what the question is? If not it's time for a rethink.

User input for a VUI is far less predictable than it is for a traditional GUI, where interactive elements are limited and well defined. Discoverability, and setting expectations and constraints for first-time users, are particularly important.

Because speech is transient, staying in echoic memory for only about 3 seconds, voice interfaces can impose a high cognitive load on the user. Speech draws heavily on both attention and memory. If the user is distracted or multitasking we face the possibility, not just of the system failing to recognize the user's speech, but of the user failing to recognize the system's speech.

Prioritize error recovery. Resolve recognition errors as quickly as possible. Don't allow errors to trigger an unrecoverable death spiral. Have a safety net. Remember the two Scotsmen we left stuck in the lift in Part 1 of this article? Can anyone remember what their first question was? "Where are the buttons?" If your application is mission-critical, or if system failure could compromise the safety of the user, don't offer VUI as the only interface.
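One way to start this discussion with a development team is to sketch the safety net in code. The Python below is a minimal illustration, not a recommended implementation: `ask_with_fallback`, the `recognize` callable, the prompt wording and the keypad fallback are all hypothetical names invented for this example and do not come from any real speech toolkit. The idea is simply to re-prompt a limited number of times, each time with a more constrained prompt, and then hand over to a non-voice interface rather than loop forever.

```python
from typing import Callable, Optional, Sequence


def ask_with_fallback(
    recognize: Callable[[str], Optional[str]],
    prompts: Sequence[str],
    fallback: Callable[[], str],
) -> str:
    """Try each prompt in turn; use the non-voice fallback if all of them fail.

    `recognize` is a hypothetical function that plays a prompt and returns
    the recognized value, or None when recognition fails.
    """
    for prompt in prompts:
        result = recognize(prompt)
        if result is not None:
            return result
    # The safety net: stop re-prompting and offer another interface.
    return fallback()


# Stub recognizer that fails on every attempt, standing in for a real engine.
def always_fails(prompt: str) -> Optional[str]:
    return None


prompts = [
    "Which floor would you like?",                   # open prompt
    "Please say a floor number, for example three.", # constrained re-prompt
]
choice = ask_with_fallback(always_fails, prompts, lambda: "keypad")
print(choice)
```

With the stub recognizer failing both times, the caller ends up at the keypad instead of a third, fourth or fifth re-prompt. The number of attempts is set by how many prompts you pass in, which makes it an explicit design decision rather than an accident.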

VUI design in consumer products and customer-facing systems is new ground for UX. So, what is good UX for VUI design? How do we create an experience that is task focused, minimizes errors, and contains a safety net to catch the user when they fall? How do we build trust?

Speech recognition feels new — but it isn't. What's new is its current ubiquity in the consumer market. This is a good thing. It means that speech recognition gets to be tested and improved in the white heat of competition. But the reality is that academic and industry speech labs around the world have been sweating over human speech perception and automatic speech recognition for 70 years. And this gives rise to a final question: Should your team hire a speech expert?

If speech-enabled systems are your main focus then yes, you should. If your speech-enabled system is a one-off, or an infrequent venture, you should hire a speech expert in a consulting capacity. Select from candidates with qualifications in the speech and hearing sciences or the human communication sciences. If you're dealing with speech you should understand speech, language and conversation, not just software and technology.

These are exciting times for voice technology and for speech recognition buffs, but we can't afford to rush ahead with technology innovations while treating our users as an afterthought. Speech communication begins and ends with humans, not with computers. This has three corollaries:

Get it wrong and users will abandon your product in a heartbeat. Get it right and you'll leave them speechless.

-oOo-

Gain hands-on practice in all the key areas of UX while you prepare for the BCS Foundation Certificate in User Experience. More details

This article is tagged guidelines.


copyright © Userfocus 2021.
